AI Agent Workforce

- Gigi Mathews

- Sep 1, 2025

Updated: Sep 6, 2025

Many great inventions evolve over time and find their practical use years or even decades after their first appearance. This is particularly true for general-purpose technology, which has broad applications in different sectors, and importantly, technology that delivers large increases in productivity. Think of steam power, electricity and the internet. Such technologies, although brilliant innovations from their inception, may wander for a while in search of problems to solve.

The Phonograph

Take, for example, the phonograph. Invented in 1877 by the ever-practical and visionary Thomas Edison, it was first put to use as an office dictating machine in 1888. Only in 1896 did the phonograph prove popular as a form of entertainment, serving as a recording device and the first home audio device.

Here are a few of the future uses Edison proposed for the phonograph in the North American Review in June 1878, shortly after its invention:

Letter writing and all kinds of dictation without the aid of a stenographer.

Phonographic books, which will speak to blind people without effort on their part.

Reproduction of music.

The "family record," a registry of sayings, reminiscences, etc. by members of a family in their own voices, and of the last words of dying persons.

Clocks that should announce in articulate speech the time for going home, going to meals, etc.

The preservation of languages by exact reproduction of the manner of pronouncing.

Educational purposes; such as preserving the explanations made by a teacher, so that the pupil can refer to them at any moment, and spelling or other lessons placed upon the phonograph for convenience in committing to memory.

Connection with the telephone, so as to make that instrument an auxiliary in the transmission of permanent and invaluable records, instead of being the recipient of momentary and fleeting communication.

The Internet

Similarly, the internet took 20 to 30 years to find its broad practical use. From its inception as ARPANET in 1969, it progressed through the development of TCP/IP, which enabled the interconnected networks that formed the global internet.

Then the 1990s saw the creation of the World Wide Web by Tim Berners-Lee, making the internet accessible with graphical browsers like Mosaic and Netscape, and leading to rapid commercialization.

The 2000s introduced Web 2.0, fostering social media and user-generated content. Ongoing developments include mobile internet, AI integration, and the emerging concepts of Web 3.0 and the metaverse.

Evolution of AI Language Models

Large language models (LLMs) too have a fascinating history that dates back to the 1960s with the creation of the first-ever chatbot, Eliza. Designed by MIT researcher Joseph Weizenbaum, Eliza marked the beginning of research into natural language processing (NLP) and the development of more sophisticated LLMs.

Google Brain launched in 2011, giving researchers more advanced techniques such as word embeddings. This paved the way for major advances in the field, including the introduction of transformer models in 2017.

Although much of the digital world has enthusiastically embraced GPT models for summarizing text and generating chats, images and videos, productivity improvements and economic value still seem elusive, which raises genuine skepticism about the huge investments made in this field. History suggests, however, that LLMs and generative AI are following the same path of evolution as earlier general-purpose technologies.

Agentic AI

Agentic AI takes the step toward workflow automation and productivity improvements that we’ve been awaiting. Agentic AI refers to artificial intelligence systems capable of reasoning about a request, making decisions, planning and acting independently to achieve a defined goal, often performing multistep workflows without constant human oversight.

While ChatGPT-type generative AI focuses on creating new content, agentic AI goes a step further by executing actions, automating complex tasks, and integrating with various tools to bridge the gap between knowledge and execution.
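To make the plan-and-act idea concrete, here is a minimal sketch of an agent loop in Python. Everything in it is an assumption for illustration: the tools `lookup_customer` and `draft_email`, the toy directory, and especially `plan_next_step`, which is a hard-coded stand-in for the decision a real agent would ask a language model to make.

```python
from typing import Callable, Optional

# Hypothetical tools: ordinary functions the agent is allowed to call.
def lookup_customer(name: str) -> str:
    directory = {"Acme": "acme-001"}  # toy data, for illustration only
    return directory.get(name, "unknown")

def draft_email(customer_id: str) -> str:
    return f"Draft follow-up email for {customer_id}"

TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_customer": lookup_customer,
    "draft_email": draft_email,
}

def plan_next_step(goal: str, history: list[tuple[str, str]]) -> Optional[tuple[str, str]]:
    """Stand-in for the model: return the next (tool, argument), or None when done.

    A real agent would ask an LLM to choose the step from the goal and history.
    """
    if not history:
        return ("lookup_customer", goal)
    if len(history) == 1:
        # Feed the result of the first step into the second one.
        return ("draft_email", history[0][1])
    return None

def run_agent(goal: str, max_steps: int = 5) -> list[tuple[str, str]]:
    """Plan, act, record the result, and repeat until the planner says stop."""
    history: list[tuple[str, str]] = []
    for _ in range(max_steps):  # hard cap so the loop always terminates
        step = plan_next_step(goal, history)
        if step is None:
            break
        tool_name, argument = step
        result = TOOLS[tool_name](argument)
        history.append((tool_name, result))
    return history
```

Calling `run_agent("Acme")` runs two steps without a human in between: it looks up the customer, then drafts an email using the ID it just found. The step cap is one simple way to keep an autonomous loop bounded.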

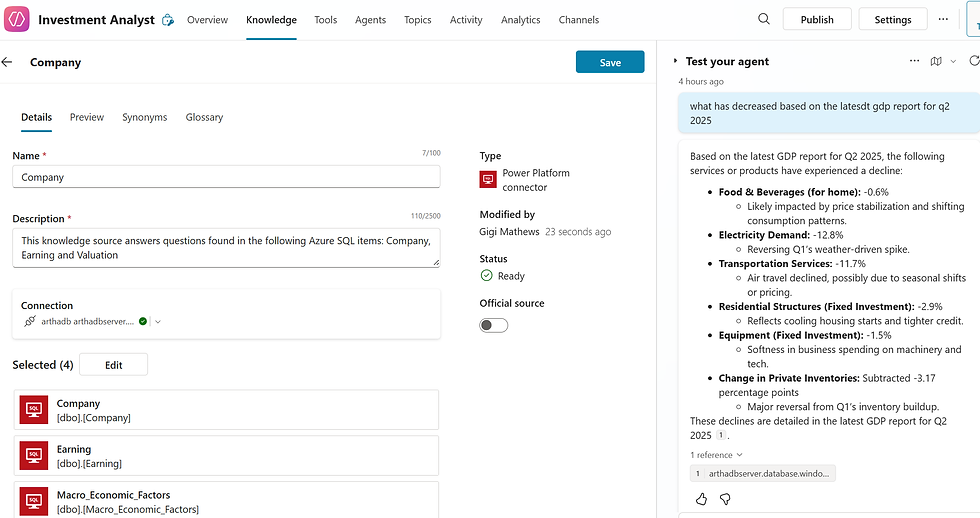

Retrieval-augmented generation (RAG), an important new concept, supplements your prompt with additional context retrieved from an external knowledge repository of documents or databases. RAG helps generative AI create a response specific to your topic and reduces hallucination. The knowledge base in the flow above serves as the retrieval source for RAG, supplying additional information and directing the model to generate content specific to your query.
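The retrieval step can be sketched in a few lines of Python. This is a simplified illustration, not a production setup: the three-sentence `KNOWLEDGE_BASE` is toy content, and the word-overlap scoring in `retrieve` is a stand-in I'm assuming in place of the embedding-based search that real RAG systems typically use. The sketch stops at building the augmented prompt and does not call any model.

```python
import re

# Toy knowledge base, for illustration only.
KNOWLEDGE_BASE = [
    "The phonograph was invented by Thomas Edison in 1877.",
    "A GDP report breaks output into consumption, investment, government spending and net exports.",
    "Agentic AI systems plan and act to reach a defined goal.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the query."""
    query_terms = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_terms & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(query: str, documents: list[str], top_k: int = 1) -> str:
    """Supplement the user's question with the retrieved context."""
    context = "\n".join(retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )
```

For a question such as "What are the components of a GDP report?", `build_prompt` places the GDP sentence ahead of the question, so the model answers from the retrieved text rather than from memory alone.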

Here is an example response to my question about specific elements of a GDP report the model retrieved:

Investment Opportunities

As agentic AI continues to advance, we've seen a notable sell-off in software companies. It began with Adobe, which was affected by the rise of generative AI models for image and video creation, and has now reached companies such as Salesforce and ServiceNow. But generating videos for fun is very different from doing so in an enterprise setting — where intellectual property rights, accuracy, security and specific use cases add layers of complexity.

Agentic AI may execute tasks non-deterministically, with minimal input, but actions like creating a contact entry, interacting with tools or updating a service ticket based on a meeting outcome still require deterministic workflows and data defined by software. These tools aren't being replaced. They're being enhanced.
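Here is a small Python sketch of that division of labor, using the service-ticket case. The `Ticket` class, the `ALLOWED_STATUSES` set and the shape of the `proposal` dictionary are all hypothetical names I'm assuming for illustration. The point is that the model may propose anything, but the software applies the change only if it passes fixed checks.

```python
from dataclasses import dataclass, field

# Statuses the (hypothetical) ticketing software accepts.
ALLOWED_STATUSES = {"open", "in_progress", "resolved"}

@dataclass
class Ticket:
    ticket_id: str
    status: str = "open"
    notes: list[str] = field(default_factory=list)

def update_ticket(tickets: dict[str, Ticket], proposal: dict) -> Ticket:
    """Apply a model-proposed update only if it passes software-defined checks."""
    ticket_id = proposal.get("ticket_id")
    status = proposal.get("status")
    if ticket_id not in tickets:
        raise ValueError(f"unknown ticket: {ticket_id!r}")
    if status not in ALLOWED_STATUSES:
        raise ValueError(f"invalid status: {status!r}")
    ticket = tickets[ticket_id]
    ticket.status = status
    note = proposal.get("note")
    if note:
        ticket.notes.append(str(note))
    return ticket
```

A proposal like `{"ticket_id": "T-1", "status": "resolved", "note": "Agreed in meeting"}` goes through, while one with a status of `"deleted"` raises a `ValueError` and leaves the ticket untouched. The same input always produces the same result, which is what the underlying software contributes.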

What we're witnessing is transformational. But AI isn't replacing jobs or software; it's improving response time and quality. And that’s a big deal.

Disclosure: The author of this article holds shares in Google, Salesforce and Adobe. This article is intended solely for informational and educational purposes and should not be considered personalized investment advice.
